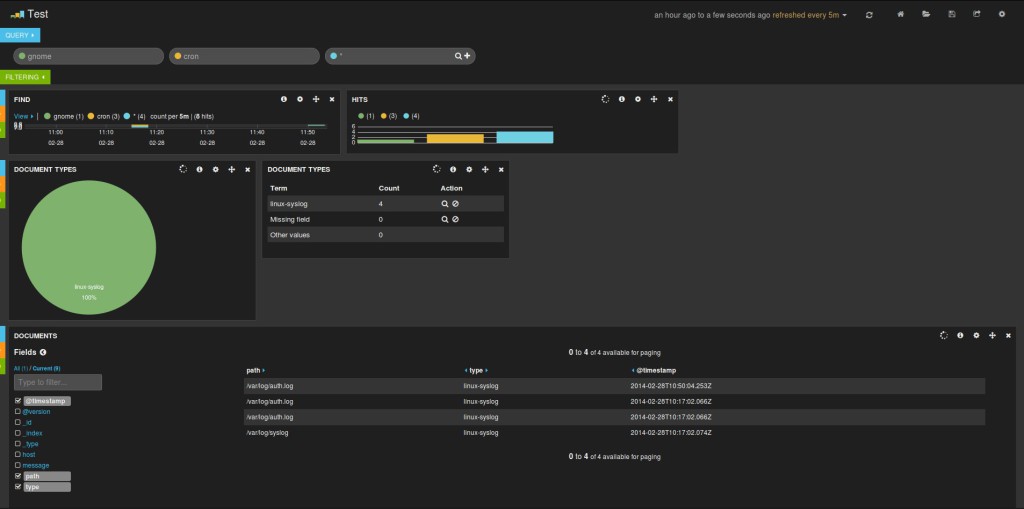

Few months ago, I was looking for information concerning auto-completion with ElasticSearch as a source. I found many solutions, but none of them really fit my needs. Nevertheless, it helped me building my request.

What’s the situation Doc?

I needed to be able to extract values from an indexed field in ElasticSearch according to a group of letters (user input). In fact, my indexed document contains a field named map which is an array of string. The idea is the following: if the user is looking for the value « name » for instance, let’s say he will first type « na ». So here we must be able to suggest searches to the user. Furthermore, with this 2 letters, we must proposed existing fields like « name », « native » or « nature ».

I’m using Elastical to interact with ElasticSearch.

First, I’m building a regex which will be used later in the request.

var search = req.body.searched.toLowerCase(),

firstLetter = search.charAt(0),

rest = req.body.searched.slice(1),

reg = "^["+firstLetter+firstLetter.toUpperCase()+"]"+rest+".*";

The regex is build to match with both an upper case char or a lower case one in first position.

What about the request?

var request = {

query: {

query_string: {

default_field: "map",

default_operator: "AND",

query: req.body.searched+"*"

}

},

facets:{

map:{

terms:{

field: "map.exact",

regex: reg,

size: 10

}

}

}

}

First, I’m asking ElasticSearch to retrieve all documents which match req.body.searched+ »* » where req.body.searched contains the user input. I’ve change the default operator to « AND » rather than « OR » in order to be able to deal with fields like « Nom de la gare » or « Name of the dog ». By default, ElasticSearch uses the « OR » operator, so it will ask for « name » OR « of » OR « the » OR « dog »; which is not what I wanted.

Then, I’m using facets to retrieve values in the field map of found documents matching the given regex. I’m using map.exact for the same reason I must use the « AND » operator.

This request works great with on the tests I’ve made. Remains to be seen if it can handle big indexes.

I can now ask ElasticSearch with Elastical and build a clean response:

elastical.search(request, function (err, results, full) {

var terms = [];

async.forEach(full.facets.map.terms, function(data, callback) {

terms.push(data.term);

callback();

}, function(err) {

res.send(terms);

});

});