On my way to a complete MongoDB monitoring solution, I’ve been playing with mongostat to see what I can achieve with it. I tested it on a simple architecture made of two shards, each shard being a replica set composed of three members. Of course, we also have three config servers and one query router.

First, I discovered a few bugs in the tool when using it with the --discover option. This option automatically retrieves statistics from all members of a replica set or a sharded cluster. With version 2.4.9 of mongostat, using it causes two other options to be ignored: --rowcount and --noheaders. So I dug into the code on GitHub and found that these bugs had already been fixed. We just need the fixes to reach the stable version.

mongostat --host localhost:24000 --discover --noheaders -n 2 30 > mongostat.log

Here I’m connecting to my query router and asking mongostat to find the other MongoDB instances by itself. The --noheaders and -n options don’t work at the moment, but that’s not a problem. With this setup, I receive a new batch of log lines every 30 seconds.
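Until --noheaders is honoured again, the header rows can be filtered out before the log reaches any parser. The Python sketch below is one hypothetical way to do that. It assumes that a header row always names the insert and query columns, which is an assumption about mongostat's output rather than something the tool guarantees. The function names and the header text in the sample are made up for the illustration.

```python
def is_header(line):
    """Return True for a column-header row (assumed to name the insert and query columns)."""
    tokens = line.split()
    return "insert" in tokens and "query" in tokens


def data_lines(lines):
    """Yield only the non-empty, non-header rows, stripped of surrounding whitespace."""
    for line in lines:
        stripped = line.strip()
        if stripped and not is_header(stripped):
            yield stripped


# Hypothetical input: one header row, one data row taken from this post, one blank line.
sample = [
    "                 insert  query update delete getmore command",
    "localhost:24000 0 0 0 0 0 0 174m 5m 0 2b 23b 2 RTR 15:36:55",
    "",
]
kept = list(data_lines(sample))
for row in kept:
    print(row)
```

The same two functions could be applied to the lines of mongostat.log before handing them to another tool.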

There are two types of log lines: the ones coming from the mongos and the ones coming from the other instances (the mongod members of the replica sets).

    localhost:21000 *0 *0 *0 *0 0 1|0 0 800m 1.04g 30m 0 local:0.0% 0 0|0 0|0 198b 924b 13 rs0 PRI 15:36:55
    localhost:21001 *0 *0 *0 *0 0 1|0 0 800m 1.01g 29m 0 test:0.0% 0 0|0 0|0 138b 359b 6 rs0 SEC 15:36:55
    localhost:21002 *0 *0 *0 *0 0 1|0 0 800m 1.01g 29m 0 test:0.0% 0 0|0 0|0 138b 359b 6 rs0 SEC 15:36:55
    localhost:21100 *0 *0 *0 *0 0 1|0 0 800m 1.05g 35m 0 local:0.0% 0 0|0 0|0 198b 924b 13 rs1 PRI 15:36:55
    localhost:21101 *0 *0 *0 *0 0 1|0 0 800m 1.01g 34m 0 test:0.0% 0 0|0 0|0 138b 359b 6 rs1 SEC 15:36:55
    localhost:21102 *0 *0 *0 *0 0 1|0 0 800m 1.01g 34m 0 test:0.0% 0 0|0 0|0 138b 359b 6 rs1 SEC 15:36:55
    localhost:24000 0 0 0 0 0 0 174m 5m 0 2b 23b 2 RTR 15:36:55

Output from mongostat.
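A quick way to tell the two kinds of row apart is to split each one on whitespace and count the fields: in the sample output, a mongod row has 22 fields and the mongos row has 15. The Python sketch below illustrates this. The column labels are illustrative (they reuse the field names from the grok configuration in this post), and the set of columns treated as blank for a mongos is inferred from the sample, not from mongostat's documentation.

```python
# Illustrative column labels for a full (mongod) row.
MONGOD_COLUMNS = [
    "host", "insert", "query", "update", "delete", "getmore", "command",
    "flushes", "mapped", "vsize", "res", "fault", "lockedDb",
    "indexMissedPercent", "QrQw", "ArAw", "netIn", "netOut",
    "connections", "replicaset", "replicaMember", "time",
]

# Columns that appear blank on the mongos row of the sample output (an inference).
MONGOS_MISSING = {
    "flushes", "mapped", "lockedDb", "indexMissedPercent",
    "QrQw", "ArAw", "replicaset",
}
MONGOS_COLUMNS = [c for c in MONGOD_COLUMNS if c not in MONGOS_MISSING]


def parse_row(line):
    """Map one mongostat row to a dict, or return None if the field count is unexpected."""
    fields = line.split()
    if len(fields) == len(MONGOD_COLUMNS):
        return dict(zip(MONGOD_COLUMNS, fields))
    if len(fields) == len(MONGOS_COLUMNS):
        return dict(zip(MONGOS_COLUMNS, fields))
    return None


mongod_row = parse_row(
    "localhost:21000 *0 *0 *0 *0 0 1|0 0 800m 1.04g 30m 0 "
    "local:0.0% 0 0|0 0|0 198b 924b 13 rs0 PRI 15:36:55"
)
mongos_row = parse_row("localhost:24000 0 0 0 0 0 0 174m 5m 0 2b 23b 2 RTR 15:36:55")
print(mongod_row["replicaMember"], mongos_row["replicaMember"])
```

Counting fields is fragile if a future mongostat version adds or removes a column, which is one reason to prefer an explicit pattern for anything beyond a quick check.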

The last line, which comes from the mongos, has empty fields, so we will need to deal with that when parsing the log. Now that we understand how mongostat works, it is time to see whether we can easily plug it into Logstash. Let’s take a look at our Logstash configuration file.

    input {
      file {
        type => "mongostat"
        path => ["/path/to/mongostat.log"]
      }
    }

We define where to find the log file.

    filter {
      if [type] == "mongostat" {
        grok {
          patterns_dir => "./patterns"
          # The replica set name is optional because the mongos line has no set column.
          match => ["message", "%{HOSTNAME:host}:%{INT:port}%{SPACE}%{METRIC:insert}%{SPACE}%{METRIC:query}%{SPACE}%{METRIC:update}%{SPACE}%{METRIC:delete}%{SPACE}%{METRIC:getmore}%{SPACE}%{COMMAND:command}%{MONGOTYPE1}%{SIZE:vsize}%{SPACE}%{SIZE:res}%{SPACE}%{NUMBER:fault}%{MONGOTYPE2}%{SIZE:netIn}%{SPACE}%{SIZE:netOut}%{SPACE}%{NUMBER:connections}%{SPACE}(?:%{USERNAME:replicaset}%{SPACE})?%{WORD:replicaMember}%{SPACE}%{TIME:time}"]
        }
      }
      # [tags] is an array, so test for membership instead of equality.
      if "_grokparsefailure" in [tags] {
        drop { }
      }
      # Drop empty lines.
      if [message] == "" {
        drop { }
      }
    }

We apply the grok filter to each message received if it comes from mongostat. If the message is empty or grok fails to parse it, we drop the log.

    output {
      stdout { }
      elasticsearch_http {
        host => "127.0.0.1"
      }
    }

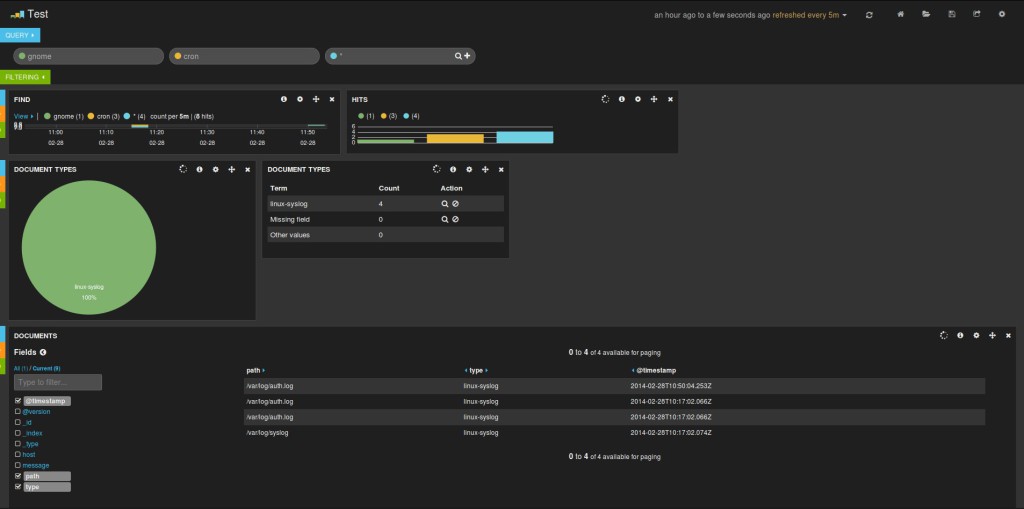

Simple output. Logs are stored in Elasticsearch so that we can use Kibana to examine them later and are written to stdout for immediate debugging and verification.

Let’s look at the filter part in a bit more detail. We give grok a directory for our custom patterns: ./patterns. This directory contains a file named mongostat with the following patterns:

    METRIC (\*%{NUMBER})|(%{NUMBER})
    COMMAND (%{NUMBER}\|%{NUMBER})|(%{NUMBER})
    SIZE (%{NUMBER}[a-z])|(%{NUMBER})
    LOCKEDDB (%{WORD}\:%{NUMBER}%)
    MONGOTYPE2 (%{SPACE}%{LOCKEDDB:lockedDb}%{SPACE}%{NUMBER:indexMissedPercent}%{SPACE}%{COMMAND:QrQw}%{SPACE}%{COMMAND:ArAw}%{SPACE})|%{SPACE}
    MONGOTYPE1 (%{SPACE}%{NUMBER:flushes}%{SPACE}%{SIZE:mapped}%{SPACE})|%{SPACE}

The MONGOTYPE patterns deal with the fields that are empty in the mongos log line: each one matches either the full group of fields or just the whitespace left in their place.
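To make the optional-group idea concrete outside of Logstash, here is a rough Python translation of these patterns. It is a sketch, not an exact port: Python's `re` module has no grok macros, so NUMBER, METRIC, COMMAND and SIZE are simplified stand-ins, and the replica set name is treated as optional because the mongos row in the sample output has no set column.

```python
import re

# Simplified stand-ins for the grok patterns (assumptions, not exact equivalents).
NUMBER = r"\d+(?:\.\d+)?"
METRIC = rf"\*?{NUMBER}"                 # "*0" or "0"
COMMAND = rf"{NUMBER}(?:\|{NUMBER})?"    # "1|0" or "0"
SIZE = rf"{NUMBER}[a-z]?"                # "800m", "1.04g" or "0"

ROW = re.compile(
    rf"^(?P<host>[\w.-]+):(?P<port>\d+)\s+"
    rf"(?P<insert>{METRIC})\s+(?P<query>{METRIC})\s+(?P<update>{METRIC})\s+"
    rf"(?P<delete>{METRIC})\s+(?P<getmore>{METRIC})\s+(?P<command>{COMMAND})\s+"
    # Counterpart of MONGOTYPE1: present on mongod rows, absent on mongos rows.
    rf"(?:(?P<flushes>{NUMBER})\s+(?P<mapped>{SIZE})\s+)?"
    rf"(?P<vsize>{SIZE})\s+(?P<res>{SIZE})\s+(?P<fault>{NUMBER})\s+"
    # Counterpart of MONGOTYPE2: lock and queue columns, also optional.
    rf"(?:(?P<lockedDb>\w+:{NUMBER}%)\s+(?P<indexMissedPercent>{NUMBER})\s+"
    rf"(?P<QrQw>{COMMAND})\s+(?P<ArAw>{COMMAND})\s+)?"
    rf"(?P<netIn>{SIZE})\s+(?P<netOut>{SIZE})\s+(?P<connections>{NUMBER})\s+"
    # The replica set name is optional here (an assumption based on the sample).
    rf"(?:(?P<replicaset>[\w.-]+)\s+)?(?P<replicaMember>[A-Z]+)\s+"
    rf"(?P<time>\d{{2}}:\d{{2}}:\d{{2}})$"
)

MONGOD_LINE = (
    "localhost:21000 *0 *0 *0 *0 0 1|0 0 800m 1.04g 30m 0 "
    "local:0.0% 0 0|0 0|0 198b 924b 13 rs0 PRI 15:36:55"
)
MONGOS_LINE = "localhost:24000 0 0 0 0 0 0 174m 5m 0 2b 23b 2 RTR 15:36:55"

for line in (MONGOD_LINE, MONGOS_LINE):
    match = ROW.match(line)
    # Groups that did not take part in the match come back as None.
    print(match["replicaMember"], match["mapped"], match["replicaset"])
```

On the mongos row the optional groups simply do not take part in the match, which is the same effect the `|%{SPACE}` alternative has in the grok patterns.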

The rest of the match directive is just about capturing each field from mongostat to produce a more readable and analysable output.
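One thing grok does not do is turn values like 800m or 1.04g into numbers: captures are stored as strings unless a type is requested, and the unit suffix would get in the way in any case. If numeric values are wanted for graphs, a conversion step is needed somewhere. The helper below is a hypothetical Python sketch of that conversion. It assumes that the suffixes b, k, m and g are powers of 1024 and that a bare number is already in bytes. Both are assumptions.

```python
# Assumed multipliers for mongostat's unit suffixes (powers of 1024).
UNITS = {"b": 1, "k": 1024, "m": 1024 ** 2, "g": 1024 ** 3}


def size_to_bytes(value):
    """Convert a size string such as '800m' or '1.04g' to a number of bytes (float)."""
    suffix = value[-1].lower()
    if suffix in UNITS:
        return float(value[:-1]) * UNITS[suffix]
    # No recognised suffix: treat the value as a plain number of bytes.
    return float(value)


for raw in ("198b", "800m", "1.04g", "0"):
    print(raw, size_to_bytes(raw))
```

A value with an unexpected suffix raises a ValueError from `float`, which seems preferable to silently storing a wrong number.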

To create this, I used an online Grok debugger, which is very useful because you don’t need to reload Logstash every time you want to test your work. It also gives you instant feedback.

I’m now waiting for the bugs to be fixed in the stable release so that this solution becomes more useful for monitoring MongoDB and can maybe be used in production.

List of available patterns on GitHub.