Pensées Libres dans un Monde Binaire

A very handy trick that I still need to try out: how to write a URL so that it uses the same protocol as the page it appears on. Simply leave out the protocol before the //, i.e.:

//domain.com
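RFC 3986 calls such a reference a network-path reference. It keeps everything except the scheme, which it takes from the page. For example, a page served from https://example.org/article that references //domain.com ends up loading https://domain.com. The sketch below handles only this one case. Full resolution of relative references is covered in RFC 3986, section 5. The function name and example.org are placeholders.

```shell
#!/usr/bin/env bash
# Minimal sketch (hypothetical helper): resolve a scheme-relative reference
# ("//host/path") against the URL of the page that contains it.
# Only the "//" case is handled; other relative forms are not resolved here.
resolve_scheme_relative() {
  local base=$1 ref=$2
  case $ref in
    //*) printf '%s:%s\n' "${base%%:*}" "$ref" ;;  # borrow the page's scheme
    *)   printf '%s\n' "$ref" ;;                   # returned unchanged
  esac
}

resolve_scheme_relative https://example.org/article //domain.com   # https://domain.com
resolve_scheme_relative http://example.org/ //domain.com/lib.js    # http://domain.com/lib.js
```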

For testing purposes, in particular to measure performance and to analyse how Mongo behaves with different amounts of RAM, I looked into how to restrict the resources of a given process on GNU/Linux.

I first turned to ulimit, which seemed to allow the RAM to be limited explicitly, in particular with the following command:

ulimit -Hm 30000

For virtual memory, replace the m with a v (both values are expressed in units of 1024 bytes), for example:

ulimit -Sv 200000
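Two details about these flags are worth knowing. Both values are in KiB, so 200000 is roughly 195 MiB. More importantly, -m sets RLIMIT_RSS, and current Linux kernels accept this limit but do not enforce it. It only ever had an effect in 2.4 kernels before 2.4.30, so the first command cannot cap RAM at all. The -v flag sets RLIMIT_AS, which is enforced. However, it caps address space rather than memory actually in use.

Limits set with ulimit apply to the current shell and to everything it starts afterwards. A tidy way to confine them to one command is to set them in a subshell and exec the command from there. The following is a sketch, and the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): run one command under a virtual-memory cap
# without changing the limits of the calling shell.
# ulimit -v counts in KiB: 200000 KiB is about 195 MiB.
run_with_vm_limit() {
  (
    ulimit -Sv 200000 || exit 1   # soft limit on address space (RLIMIT_AS)
    exec "$@"                     # the command inherits the limit
  )
}
```

For instance, `run_with_vm_limit mongod` would start mongod under the cap, while the interactive shell keeps its own limits.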

Unfortunately, this approach was not satisfactory. Watching my mongo with mongostat, I could see it going well past the limits imposed. So I turned to another solution: cgroups.

To quote Wikipedia's definition, cgroups are a Linux kernel feature for "limiting, accounting and isolating resource usage". That's handy, because it is exactly what I'm trying to do. As a bonus, cgroups are used by LinuX Containers (LXC), a "lightweight virtualization" technique, which is one more reason to take an interest in them.

Back to our problem of limiting mongo's RAM. First, the environment needs to be set up:

sudo aptitude install libcgroup1

If the package isn't available under that name, a quick search for cgroup should turn it up. On Debian and Ubuntu, the cgcreate, cgset and cgexec commands come from the cgroup-tools package, formerly cgroup-bin. First, we create a new cgroup through which the limits will be applied:

sudo cgcreate -t <user> -a <user> -g memory,cpu:<groupname>

Here, our cgroup can impose limits on memory (memory) and on the processor (cpu). The -t and -a options make <user> the owner of the group's tasks file and control files. This means the group's parameters can now be set without sudo, starting with memory:

echo 33000000 > /sys/fs/cgroup/memory/<groupname>/memory.limit_in_bytes

Here we limit the cgroup's RAM to 33 MB (33,000,000 bytes). Size suffixes are accepted, so 33000000 could, for instance, be written as 33M. Keep in mind that the kernel reads M as 1024 × 1024: 33M is therefore 34,603,008 bytes, slightly more than 33,000,000. All that's left is to start our mongo process under these restrictions:

cgexec -g memory:<groupname> mongod
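The three steps above can be gathered into a single function. This is a sketch that assumes the cgroup v1 memory controller and the libcgroup tools. The function name, the group name and the mongod arguments in the example are illustrative. Instead of echo, it uses cgset from the same tool set, which writes the same memory.limit_in_bytes file. On systems that use the unified cgroup v2 hierarchy, the corresponding file is memory.max instead.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper, cgroup v1): create a memory cgroup, cap it,
# and start a command inside it.
run_with_memory_cap() {
  local group=$1 limit=$2
  shift 2
  # Create the group and give the current user its tasks and control files.
  sudo cgcreate -t "$USER" -a "$USER" -g "memory:$group"
  # Same effect as: echo "$limit" > /sys/fs/cgroup/memory/$group/memory.limit_in_bytes
  cgset -r "memory.limit_in_bytes=$limit" "$group"
  # Start the command with the group applied.
  cgexec -g "memory:$group" "$@"
}

# Example (illustrative names and path):
# run_with_memory_cap mongotest 33M mongod --dbpath /tmp/mongotest
```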

Monitoring our mongo with mongostat, we can then see the number of page faults increase as we reduce the available memory significantly. This shows that mongo does respect the limit.
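mongostat shows the effect from mongo's side, and the cgroup's own counters show it from the kernel's side. The sketch below assumes the cgroup v1 memory controller and reads three of its control files. These are memory.usage_in_bytes, memory.limit_in_bytes and memory.failcnt, the last of which counts how many times usage hit the limit. Both cgroup_mem_report and the CGROUP_MEMORY_ROOT override are hypothetical. The override exists only so that the function can be tried against a directory of sample files.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper, cgroup v1): print a memory cgroup's usage,
# its limit, and how often the limit was hit.
# CGROUP_MEMORY_ROOT is a hypothetical override; it defaults to the usual mount point.
cgroup_mem_report() {
  local group=$1
  local dir="${CGROUP_MEMORY_ROOT:-/sys/fs/cgroup/memory}/$group"
  local usage limit failcnt
  usage=$(< "$dir/memory.usage_in_bytes")
  limit=$(< "$dir/memory.limit_in_bytes")
  failcnt=$(< "$dir/memory.failcnt")
  printf 'usage=%s limit=%s failcnt=%s\n' "$usage" "$limit" "$failcnt"
}
```

Calling `cgroup_mem_report <groupname>` from time to time while mongostat runs should show usage staying at or below the limit and failcnt increasing when memory gets tight.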

So there you have a short example that saves you from filling up your RAM entirely before you can start testing mongo, in particular when studying the number of page faults as a function of the working set and the available RAM. The same approach can of course be applied to other processes to keep them from consuming all of the machine's resources. For more information on the subject, see the Arch Linux wiki or this Red Hat guide.

Or why a password should have a minimum length and avoid following a particular pattern.

As you probably know, it is not always easy for a hacker who has discovered a security hole in a computer system to contact the people responsible for it and report the problem. Some companies readily act on such reports (I believe), and Google is the first that comes to mind. In other cases, merely reporting the hole gets you treated as a malicious hacker…

With that short introduction out of the way, I'll turn to a situation I ran into. It is not a security hole as such, but an observation about how a platform creates and uses passwords. Let's take a look at the problem.

The platform in question does not host sensitive personal data as such: no credit card numbers, no addresses. It does, however, provide a user account holding a minimum of information about the user, notably their first and last names. Now for the sore point: the password for the user area. First, it cannot be changed through the interface. Second, and this is where some readers will start choking, the password is only 5 characters long.

Let's count how many different passwords are possible if each character is drawn from:

* the letters of the Latin alphabet, lower or upper case (52 possibilities).

* the digits (10 possibilities).

* special characters such as @ / [ { (about 34 possibilities).

In short, each character has 52 + 10 + 34 = 96 possibilities.

This gives 96 * 96 * 96 * 96 * 96 = 96^5 = 8,153,726,976, a little over 8 billion different passwords. That's not much, but it is still far more than the number coming next.
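The count above is simply the alphabet size raised to the power of the password length. Bash's 64-bit integer arithmetic is enough to reproduce it. The following is a minimal sketch, and keyspace is a hypothetical name.

```shell
#!/usr/bin/env bash
# Number of possible passwords when each of `length` characters is drawn
# independently from `alphabet_size` symbols: alphabet_size ^ length.
keyspace() {
  local alphabet_size=$1 length=$2
  echo $(( alphabet_size ** length ))
}

# 52 letters + 10 digits + about 34 special characters, 5 characters long
keyspace $(( 52 + 10 + 34 )) 5   # prints 8153726976
```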

And the massacre isn't over… the passwords follow a particular pattern. They are made of the user's year of birth and the first letter of their surname. In short, that is one letter and a 4-digit number: 10 * 10 * 10 * 10 * 26, i.e. 260,000 possibilities. We are a long way from the initial 8 billion, and it's starting to get scary.

The number can be cut down further, since we are talking about a year of birth. A simple range of about a hundred years is almost certain to cover every case, except for people over a hundred. In the end, we are left with at most 100 * 26 = 2,600 different passwords, which is simply ridiculous.
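Here is the same arithmetic for the pattern-based passwords. It first counts any four digits, then restricts the digits to about a century of birth years. The variable names are illustrative.

```shell
#!/usr/bin/env bash
# Size of the "initial + year of birth" password space described above.
letters=26                        # first letter of the surname
any_four_digits=$(( 10 ** 4 ))    # 0000 to 9999
plausible_years=100               # roughly a century of birth years

echo $(( letters * any_four_digits ))   # prints 260000
echo $(( letters * plausible_years ))   # prints 2600
```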

Of course, someone could point out that you would need to know the account's identifier for this to become dangerous. True, and in our case, alas, the identifier is just a 9-digit number. In my view, security on this platform is insufficient because of a flawed password-generation policy.

Imagine for a moment that we could test one password per second… You see where I'm going, don't you? Every possibility could be tried in under an hour: 2,600 attempts take 2,600 seconds, or 43 minutes and 20 seconds to be precise.
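Here is the worst-case time needed to try every candidate, at the assumed rate of one attempt per second. This is a small sketch, and exhaust_time is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Worst-case time to try every candidate at a given rate (attempts per second),
# rounded up to a whole second and shown as minutes and seconds.
exhaust_time() {
  local candidates=$1 per_second=$2
  local seconds=$(( (candidates + per_second - 1) / per_second ))
  printf '%d min %d s\n' $(( seconds / 60 )) $(( seconds % 60 ))
}

exhaust_time 2600 1   # prints "43 min 20 s"
```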

I am writing all this to try to show how bad it is for every user's password to follow the same pattern, and worse still when the password is generated from personal data such as the date of birth. I can understand some of the choices that may have led to this situation. The passwords are easy for users to remember, and there is no need for a password-recovery system since everyone knows the pattern. They also take up less space in the database. Even so, none of these arguments seems to me enough to justify such a security policy, especially when handling even a minimum of personal data.

Here is a little trick I found online to get a notepad in your browser using nothing but HTML. Just type the following into the browser's address bar:

data:text/html, <html contenteditable>

Add a bookmark and you're all set.

I personally use a slightly customized version with white text on a black background:

data:text/html, <html contenteditable style="color:white; background-color:black">
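The style attribute accepts other CSS colours too. There is one catch if you switch to hex codes such as #fff. In a URL, # starts the fragment, so the page content would be cut off at that point, and the character has to be written as %23. The following is a sketch of a helper that builds the bookmark text. The name notepad_url is hypothetical, and its defaults reproduce the version above.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): build the notepad data URL with chosen colours.
# '#' would start the URL fragment, so it is percent-encoded as %23.
notepad_url() {
  local fg=${1:-white} bg=${2:-black}
  local style="color:${fg//\#/%23}; background-color:${bg//\#/%23}"
  printf 'data:text/html, <html contenteditable style="%s">\n' "$style"
}

notepad_url               # same URL as the white-on-black version above
notepad_url '#eee' '#222' # hex colours, with '#' encoded as %23
```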

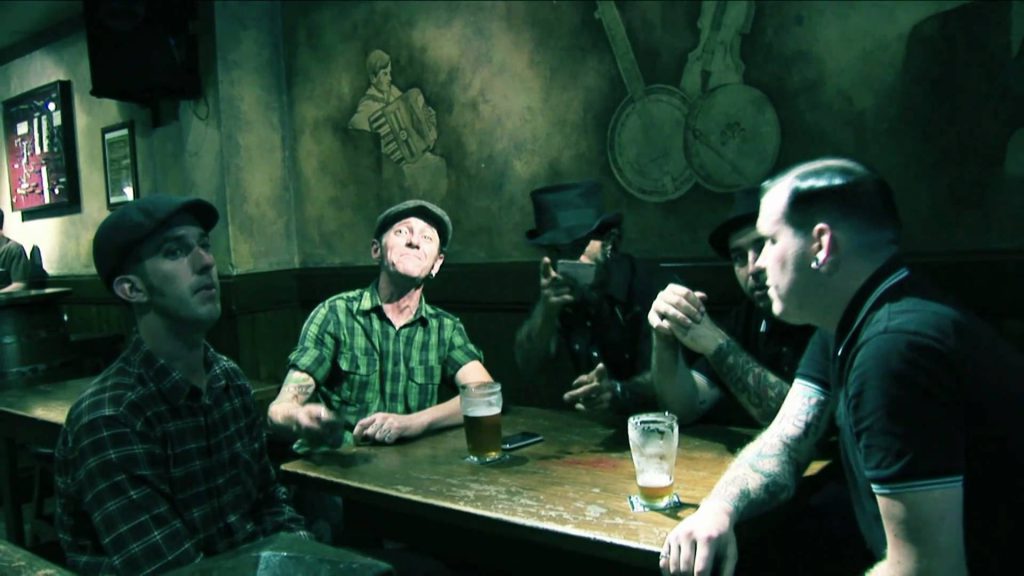

Very energetic music from the Australian band The Rumjacks. On the menu: Celtic, folk and punk. They have one album to their name, Gangs Of New Holland, a skilful blend of all these genres. Not to mention a wild banjo.